Methodology

WHAT IT IS

The Justice Snapshot is a rapid justice assessment, designed to enable local teams deployed on the ground to collect data across the justice system. It engages with justice stakeholders and national authorities to produce a common evidence base for planning short, medium and long term reforms in the justice system, while providing a monitoring and evaluation framework to measure progress.

Experience shows that justice data may be scattered, but they exist. The Justice Snapshot comprises data collection within an accessible and interactive website that anchors system stabilization in reliable baseline data and encourages national institutions to invest in data collection to inform and streamline their priorities for programming.

The data are collected for a 12-month period against an agreed cut-off date. In this Justice Snapshot of Sri Lanka, the data are for the year 2024 (from 1 January to 31 December).

WHAT IT DOES

The Justice Snapshot sets in context the environment within which justice operates. It depicts the way the justice system is designed to function in law; the flow of cases through the criminal and civil courts; justice system governance; and budget and expenditure.

The Snapshot provides a library of reference documents, from the laws in effect to national policy documents and project papers and research / academic studies, to provide a ‘go to’ resource. It enables users to cross check the data used in the visualizations with the source data in the Baseline.

Most importantly, it leaves national authorities and development partners with greater analytical and monitoring capacity than when it began. By embedding the vast array of information that has been collected, analyzed, and visualized within a website, the Snapshot can be updated to establish an increasingly accurate repository of data to inform both justice policy and the strategic interventions needed going forward.

WHY IT WORKS

It provides context, bringing together information about: the justice services provided within a governance framework; the arrests and imprisonment of large numbers of people; and the funding envelopes available to deliver justice generally and by institution. These data may better inform responses to provide justice and other social services where they are needed most.

It measures impact, compiling data from the most elemental level of individual courts, prisons and legal aid centers to identify weaknesses or gaps in personnel, infrastructure and material resources. This enables state planners and development partners to assess and address highly strategic building, equipping, and training needs and to monitor and evaluate incremental progress over time so as to scale up what works and remedy what does not.

It is accessible, quick and transparent, applying a tried and tested methodology and using live, interactive visualizations, instead of a static report, to accentuate nuances in the data, while signaling discrepancies through data notes and making all source data available to users.

It is collaborative and transferable, engaging the justice institutions from the beginning in collecting and analyzing their own data to stabilize justice system operations. It is objective and apolitical, generating a series of data-driven accounts of the functioning of the justice system, ranging from security to infrastructure/resources, case-flow and governance – and weaving them together to illustrate how the whole of a country’s justice system is functioning, giving national authorities the tools to inform their interventions, rather than policy prescriptions.

HOW IT WORKS

The data are collected from the Ministry of Justice, Legal Aid Commission, Courts, Prisons, Department of Community Based Corrections (DCBC), Bar Association and Community Mediation Board with the consent of the principals of each institution. The data are owned by the respective institutions and are shared for purposes of combining them on the same site.

The methodology applied in the Justice Snapshot derives from the Justice Audit. The Justice Audit is distinguished from the Justice Snapshot in that the former takes place over a longer period of time and is able to serve as a health check of the justice system. It engages with governments to embark jointly on a rigorous data collection, analysis and visualization process to better inform justice policy and reform.

As with the Justice Audit, the Justice Snapshot does not rank countries, nor score institutions. Instead, it enjoins justice institutions to present an empirical account of system resources, processes and practices that allows the data to speak directly to the stakeholder. Justice Audits and Justice Snapshots have been conducted elsewhere at the invitation of the governments and stakeholders concerned in Afghanistan, Malaysia, Bangladesh, the United States of America, South Sudan, South Central Somalia, Somaliland and Ethiopia.

The data collected in the Justice Snapshot comprise a breakdown of each institution’s resources, infrastructure and governance structures, and track how cases and people make their way through the system. All data are, in so far as it is possible, disaggregated by age, gender and physical disability. All data are anonymised, with Personally Identifiable Information (PII) removed.

The data in this Snapshot are self-reported. They are taken from annual performance reports prepared by the Ministry of Justice (MoJ) and each institution, and are supplemented by questionnaires agreed with the institutions and distributed to the individual facilities in the districts. The Police, the Attorney General’s Department (AGD) and probation did not return any data forms.

The supplementary data forms, once collected, are cleaned of obvious errors. Any gaps in the data are marked ‘-99’; a ‘0’ is entered only where the data show a genuine zero (for instance, 0 vehicles). Where the accuracy of data cannot be verified, or the data require further explanation, this is indicated in the ‘Data Note’ box. The cleaned data sheets appear by institution in the Baseline Data, as these are the data visualised throughout the Justice Snapshot.
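To illustrate why the ‘-99’ marker matters, the following minimal Python sketch sums a reported field across facilities while skipping ‘-99’ entries, so that a gap is not mistaken for a genuine zero. The facility names and vehicle counts are invented for illustration; only the ‘-99’ convention comes from the methodology.

```python
NO_DATA = -99  # marker used in the Baseline Data for a gap in the data


def summarise(values):
    """Return (total, reported, missing) for a list of facility values.

    Entries equal to NO_DATA are excluded from the total; a genuine 0
    (for instance, 0 vehicles) is counted as a reported value.
    """
    reported = [v for v in values if v != NO_DATA]
    missing = len(values) - len(reported)
    return sum(reported), len(reported), missing


# Hypothetical vehicle counts from four facilities (illustrative only).
vehicles = {
    "Facility A": 3,
    "Facility B": 0,        # a genuine zero
    "Facility C": NO_DATA,  # no data returned for this field
    "Facility D": 2,
}

total, reported, missing = summarise(list(vehicles.values()))
print(f"{total} vehicles across {reported} reporting facilities; {missing} with no data")
```

Keeping the count of missing entries alongside the total makes it visible when a national figure rests on incomplete returns.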

Note: the data captured will never be 100% accurate. Gaps and errors will occur, especially in this first Justice Snapshot, as those working on the frontline of the justice system are not used to collecting data systematically, and especially not on a disaggregated basis. However, data collection and accuracy will improve over time as systems are embedded within each institution.

The data are then organized and forwarded to a team of data visualisation experts who design the visualizations based on the data and populate the visuals with the data. Both the Justice Audit and Justice Snapshot are designed to be living tools rather than one-off reports. The purpose is to capture data over time and identify trends and so monitor more closely what works (and so scale up) and what does not (and so recalibrate or jettison).

HOW DATA MAY BE UPDATED AND SUSTAINED

The engagement with key institutional actors at the outset is not just a courtesy. The methodology aims to maximize the participation of all actors and encourage them to invest in their own data collection better to inform policy for the sector as a whole and leverage more resources for their own institution. From the moment it is formed, the Justice Snapshot Technical Committee (JSTC) takes ownership of the process, and so is central to this approach.

Following this Justice Snapshot, it is intended that the JSTC members encourage their respective institutions to collect disaggregated data, at regular intervals, using standardised data collection sheets. These data will be reported in line with existing procedures up the chain – and to an information management unit (IMU) to conduct successive Justice Snapshots going forward at 1-2 year intervals to monitor change over time.

Each Justice Snapshot follows a seven-stage process:

- Planning

- Framing

- Collecting

- Interrogating

- Designing

- Validating

- Transferring

| Stage | Activity | Pre-Jun | Jun | Jul | Aug | Sep | Oct | Nov | Dec | Jan26 | Feb |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Planning | Iterative consultations with national stakeholders introducing Justice Audit methodology leading to consent from all institutional principals and launch in Feb 2025. |  |  |  |  |  |  |  |  |  |  |
|  | Justice Snapshot Technical Committee formed with nominations from principals of each institution. |  |  |  |  |  |  |  |  |  |  |
|  | Full team contracted and in place |  |  |  |  |  |  |  |  |  |  |
|  | Literature review and population of Library + Situational Overview (Justice in Law, Budgets and Governance) |  |  |  |  |  |  |  |  |  |  |
| Framing | Supplementary Data forms shared with institutions |  |  |  |  |  |  |  |  |  |  |
|  | Consultations with each institution on supplementary data form |  |  |  |  |  |  |  |  |  |  |
|  | Data integrity check |  |  |  |  |  |  |  |  |  |  |
|  | Presentation of Wireframe |  |  |  |  |  |  |  |  |  |  |
| Collecting | Supplementary data collected from courts, prisons, LAC, DCBC + national data from 2024 performance reports (including police and MoJ) and from CMB and BASL |  |  |  |  |  |  |  |  |  |  |
|  | Review of case data from institutional performance reports producing national caseflow from arrest to final appeal (criminal) and from initial action to execution (civil). |  |  |  |  |  |  |  |  |  |  |
| Interrogating | Justice Services: cleaning of returned Supplementary Data forms and formatting |  |  |  |  |  |  |  |  |  |  |
|  | Gaps analysis and draft Action Matrix drawn from cleaned Baseline data |  |  |  |  |  |  |  |  |  |  |
|  | Policy analysis and draft Roadmap |  |  |  |  |  |  |  |  |  |  |
| Designing | Justice Services: visualisation of baseline data at national, provincial and district levels |  |  |  |  |  |  |  |  |  |  |
|  | Caseflow visual (completing the Situational Overview) |  |  |  |  |  |  |  |  |  |  |
|  | Interactive Action Matrix |  |  |  |  |  |  |  |  |  |  |
|  | Roadmap |  |  |  |  |  |  |  |  |  |  |
|  | Draft Commentaries and technical manual |  |  |  |  |  |  |  |  |  |  |
| Validating | Consultations on draft Action Matrix |  |  |  |  |  |  |  |  |  |  |
|  | Presentation of draft Justice Snapshot |  |  |  |  |  |  |  |  |  |  |
|  | Incorporation of comments |  |  |  |  |  |  |  |  |  |  |
|  | Presentation of final Justice Snapshot |  |  |  |  |  |  |  |  |  |  |
| Transferring | Presentation of final Justice Snapshot; nomination of IMU |  |  |  |  |  |  |  |  |  |  |
|  | Hand over to IMU with technical manual |  |  |  |  |  |  |  |  |  |  |

1. PLANNING

A process of consultations with government and institutional stakeholders was undertaken by UNDP with an international consultant (team leader) prior to the start of the Justice Snapshot culminating with the appointment of a Justice Snapshot Technical Committee (JSTC).

The team was completed with the appointment of two more international consultants (a senior data analyst and a senior data visualisation expert) and two national consultants, who carried out an extensive literature review (see: Library).

2. FRAMING

Field work started with a Data Integrity Check to determine how each institution generates, stores and communicates its data up the institutional chain. Supplementary data forms were created and refined by each institution, going deeper than the annual performance reports into how the institutions functioned at their most local level.

The forms were structured around key categories: material resources, human resources, infrastructure, physical space, and security conditions. Gender disaggregation was integrated directly into the forms, ensuring that disparities and gaps could be identified across institutional branches. The forms were then disseminated across the institutional landscape, save for the Police, the Attorney General’s Department (AGD) and the Probation and Child Care Department, which did not participate.

3. DATA COLLECTION

The team collected national data from institutional annual performance reports. The supplementary data forms were completed by the institutions in each of the 25 districts (save for Community Mediation Board and Bar Association which compiled their data centrally) and returned to the team for review.

4. INTERROGATION

The institutional data sheets submitted by the justice institutions formed the basis for the next stage of the process: the interrogation and cleaning of all received material. With hundreds of supplementary data forms to manage, a colour-coded tracking sheet was established to identify which forms had been received, sent back and cleaned.

| PROVINCE | DISTRICT | PLACE / NAME | CATEGORY OF INSTITUTION | In | Out | Cleaned |
| --- | --- | --- | --- | --- | --- | --- |
| Central | Kandy | Pallekelle * | Open Prison Camps |  |  |  |
| Central | Kandy | Bogambara Prison | Remand Prisons |  |  |  |
| Eastern | Batticaloa | Batticaloa | Remand Prisons |  |  |  |
| Eastern | Trincomalee | Trincomalee | Remand Prisons |  |  |  |
| North Central | Anuradhapura | Anuradhapura | Remand Prisons |  |  |  |
| North Central | Polonnaruwa | Polonnaruwa | Remand Prisons |  |  |  |
| North West | Kurunegala | Wariyapola | Work Camps |  |  |  |
| Northern | Jaffna | Jaffna | Remand Prisons |  |  |  |
| Northern | Vavuniya | Vavuniya | Remand Prisons |  |  |  |
| Sabaragamuwa | Kegalle | Kegalle | Remand Prisons |  |  |  |
| Sabaragamuwa | Kirilapura | Asmadala | Remand Prisons |  |  |  |
| Sabaragamuwa | Kegalle | Ambepussa* – Paboda Meth Sevana | Work Camps |  |  |  |
| Southern | Hambanthota | Angunakolapelessa Prison | Closed Prisons |  |  |  |
| Southern | Galle | Boossa | Remand Prisons |  |  |  |
| Southern | Galle | Galle | Remand Prisons |  |  |  |
| Southern | Matara | Matara | Remand Prisons |  |  |  |
| Southern | Hambanthota | Weerawila * | Work Camps |  |  |  |
| Uva | Badulla | Taldena C.C.V.O. | Open Camp |  |  |  |
| Uva | Badulla | Badulla | Remand Prisons |  |  |  |
| Uva | Monaragala | Monaragala | Remand Prisons |  |  |  |
| Western | Colombo | Welisada Prison | Closed Prisons |  |  |  |
| Western | Gampaha | Mahara Prison | Closed Prisons |  |  |  |
| Western | Colombo | Prison Headquarters | Head Quarter |  |  |  |
| Western | Colombo | Welikada; Borella C.C.V.O. | Open Camp |  |  |  |
| Western | Colombo | Colombo | Remand Prisons |  |  |  |
| Western | Colombo | New Magazine | Remand Prisons |  |  |  |
| Western | Gampaha | Negombo | Remand Prisons |  |  |  |
| Western | Kalutara | Kalutara | Remand Prisons |  |  |  |
| Western | Colombo | Homagama (Watareka) | Work Camps |  |  |  |
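The tracking sheet above can be thought of as a simple status record per form. The sketch below is one hypothetical way to represent it in Python; the field names and the rule for deriving a single status are assumptions for illustration and not the team's actual tool. Reading ‘In’ as received, ‘Out’ as sent back for correction and ‘Cleaned’ as cleaned follows the description in the text.

```python
from dataclasses import dataclass


@dataclass
class FormTracker:
    province: str
    district: str
    name: str
    category: str
    received: bool = False   # 'In': form received from the facility
    sent_back: bool = False  # 'Out': form returned to the facility for correction
    cleaned: bool = False    # 'Cleaned': form has been cleaned

    def status(self) -> str:
        """Derive one status label (an assumed rule, for illustration)."""
        if self.cleaned:
            return "cleaned"
        if self.sent_back:
            return "sent back"
        if self.received:
            return "received"
        return "outstanding"


# Two rows taken from the extract; the flag values are invented.
forms = [
    FormTracker("Central", "Kandy", "Bogambara Prison", "Remand Prisons",
                received=True, cleaned=True),
    FormTracker("Northern", "Jaffna", "Jaffna", "Remand Prisons"),
]

for form in forms:
    print(f"{form.district} / {form.name}: {form.status()}")
```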

4.1 Application of the Four-Eyes Principle

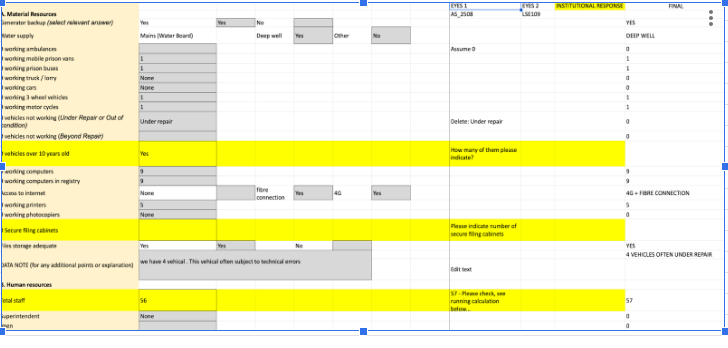

Each individual form was reviewed using the Four Eyes principle (i.e. two sets of eyes on each form). Forms were checked for missing information, unclear entries, inconsistencies and errors. Forms requiring correction were returned to the relevant facility, updated and resubmitted. In a number of cases, the data cleaning team made assumptions based on the data entries: for instance, where a ‘–’ was entered or the value was left blank, either a ‘0’ or ‘-99’ (no data) was inserted in its place. Once cleaned, the data were entered in the FINAL column (see extract) and uploaded onto a consolidated data sheet for that institution (which can be viewed in the BASELINE DATA).
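The kind of assumption described above can be sketched as a small normalisation function. This is a minimal Python illustration, not the cleaning team's actual procedure: the function name, the `blank_means_zero` flag and the choice to default to ‘-99’ are all assumptions, since the text does not state when a blank was treated as 0 rather than as no data.

```python
NO_DATA = -99
DASHES = {"-", "–"}  # hyphen and en dash, as sometimes entered on forms


def clean_entry(raw, blank_means_zero=False):
    """Normalise one raw form entry to an integer for the FINAL column.

    Blank or dash entries become 0 only when the reviewer has judged that
    the item is genuinely absent (blank_means_zero=True); otherwise they
    become NO_DATA. Entries that cannot be read as a whole number are also
    marked NO_DATA here; in practice such a form might instead be returned
    to the facility for correction.
    """
    text = "" if raw is None else str(raw).strip()
    if text == "" or text in DASHES:
        return 0 if blank_means_zero else NO_DATA
    try:
        return int(text)
    except ValueError:
        return NO_DATA


# Hypothetical raw entries, paired with the reviewer's judgement.
examples = [("12", False), ("–", False), ("", True), ("n/a", False)]
for raw, judged_zero in examples:
    print(repr(raw), "->", clean_entry(raw, blank_means_zero=judged_zero))
```

Making the zero-versus-no-data decision an explicit argument keeps the reviewer's judgement visible, rather than burying it in the cleaning step.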

4.2 Treatment of Missing or Unreliable Data

Where fields were left blank, or where the reviewers determined that the information provided could not be relied upon, the value “-99” was entered. This followed the convention already established during the planning stage and allowed analysts and, prior to the deadline, institutional reviewers to distinguish between:

- data that were genuinely unavailable

- data that existed but had not been provided

- data that had been deemed unreliable

Once the submission deadline had passed, any institution that had not returned its forms was listed as not having done so. The missing forms were named and coded but left blank.

4.3 Consolidation and Organisation of the Data

After the EYES 1 and EYES 2 reviews were complete, all processed documents were transferred into a dedicated “Consolidated” folder. Within this folder, data were organised by institution, with individual files created for each body to allow for traceability and to mirror the architecture of the final dataset.

Forms were then transcribed into structured spreadsheets, maintaining a consistent sequence of fields across institutions. The team applied a unified coding system to harmonise terminology and ensure comparability between institutions whose forms were formatted differently. This step was essential for establishing a coherent national dataset capable of being analysed and, later, visualized.

The consolidation stage thus served two purposes:

- to gather the data into one structured repository, and

- to translate any institution-specific reporting styles into a standardised format suitable for cross-institutional analysis.
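The second purpose can be illustrated with a short Python sketch that maps institution-specific field labels onto one shared set of column names. The labels, the mapping and the sample rows are hypothetical; the actual coding system used by the team is not described in this section.

```python
NO_DATA = -99

# Hypothetical mapping from institution-specific labels to standard codes.
FIELD_CODES = {
    "no. of vehicles": "vehicles",
    "vehicles (total)": "vehicles",
    "female staff": "staff_female",
    "staff - women": "staff_female",
}

# The shared field sequence applied to every institution (illustrative).
STANDARD_FIELDS = ["vehicles", "staff_female"]


def standardise(institution, row):
    """Translate one institution's row into the shared field sequence.

    Fields the institution did not report are filled with NO_DATA so that
    every consolidated row has the same columns in the same order.
    """
    out = {"institution": institution}
    out.update({field: NO_DATA for field in STANDARD_FIELDS})
    for label, value in row.items():
        code = FIELD_CODES.get(label.strip().lower())
        if code is not None:
            out[code] = value
    return out


rows = [
    standardise("Prisons", {"No. of vehicles": 4, "Female staff": 12}),
    standardise("Courts", {"Vehicles (total)": 1}),
]

for row in rows:
    print(row)
```

Because every row ends up with identical keys in a fixed order, the consolidated sheets can be compared across institutions without further reshaping.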

5. DESIGNING

The visualization of data (often dense and complex) ensures the viewer can stay in the blue water observing the whole justice system, while at the same time being able to dive down into the weeds to see what is happening there. The viewer is urged to refer back to the Baseline data (the ‘weeds’) to check the view being offered. Data Notes either come from the institutions themselves, or are entered by the team to shed light on the data shown.

The data collected (national and supplementary) were then analysed to identify gaps and blockages in the justice system and remedial activities needed to address them. A series of commentaries for each institution were drafted to highlight gaps as well as to accompany an interactive Action Matrix showing areas for investment.

The Roadmap signposts the way forward in monitoring progress against policy targets and programme goals over five years (2026-2030). The team analysed the policy papers produced by government as they referred to justice, including the National Policy Framework: A Thriving Nation A Beautiful Life; the National Legal Aid Policy 2016; Prison Overcrowding: Proposed Short-Mid-Long term Plan to Overcome the Challenge; and the National Strategic Plan for Social Cohesion and Reconciliation, among others. These were then aligned with the investment options set out in the Action Matrix to inform further consultations.

6. VALIDATING

A draft of the completed Justice Snapshot was presented to the JSTC as well as to donors and key stakeholders in Colombo. Comments were noted and incorporated in a final version.

7. TRANSFERRING

A manual was developed to assist the information management unit (IMU) in updating the Justice Snapshot going forward, with technical assistance offered virtually to accompany the unit as needed.